At Bearer, we often get asked: “How do you detect and classify data just by scanning the source code?” To answer that, we need to answer two important questions:

- Why Static Code Analysis (SCA) is a relevant approach for data mapping.

- How we detect and classify data by scanning code repositories.

Why Static Code Analysis

Mapping the data flows in your software is the first step to ensure your data security and privacy policies are implemented properly.

We come from the API monitoring world, so our first thought process involved the use of in-app agents to catch application traffic in real-time and monitor data flows. This approach has a few big drawbacks:

- Agents need to be configured across every application and service in the organization.

- They run in production, and they can conflict with other libraries.

- They may add security vulnerabilities (hello Log4j 👋).

Long story short, our users were very reluctant to install agents. We really can’t blame them, so we started exploring other options. That led us to the approach we use today. We needed to break this first step down further: before we can map the movement of data, we need to identify where it lives.

Static Code Analysis (SCA) is the process of reading the source code without actually running it. It is notably used by security teams for Static Application Security Testing (SAST) to identify code issues, security vulnerabilities, and violations of company policies. In our eyes, SCA brought four clear benefits to security teams:

- Cost and time savings: They could deploy Bearer in less than 30 minutes.

- Security: They could use Bearer without giving us direct access to their codebase, through an on-premise Broker. We also don’t need to scan the actual data in databases, like some tools.

- Continuous improvement: They could use Bearer to embed data flow mapping and risk assessment within the entire software development lifecycle (the DevSecOps approach).

- Scalability: SCA adapts well to large and complex codebases, contrary to agents and proxies.

Our main question was: can we detect and classify data with sufficient accuracy just by scanning the code? Our SCA journey these past months leads to a strong YES. Here is the history of our experiments 👇

Our Static Code Analysis journey

By scanning the source code, we aim to:

- Detect the engineering components that make up your product (repositories, databases, third-party APIs, etc.)

- Detect and classify the data processed by these engineering components.

This blog post will focus on component detection. We will dive more into the data detection and classification in future articles.

Proof of Concept: API detection within repositories

The first challenge of the security teams we talked to was to map data flows in an API-first environment. The increasing number of internal microservices and third-party APIs made it very difficult to keep visibility. So we built a proof of concept to detect internal and external APIs within code repositories.

Start with the basics

We started by using git grep to detect regular expressions that could match the patterns of APIs, like https:// or http://. It is quick and efficient to search within git projects.

We detected domain names in files along with the filename and the line number. However, we got a significant amount of false-positive out of this process.

For instance, the httparty gem returned:

You can probably see the problem already. Common documentation and testing files flood the results with information that isn't important to us.

Ruby, our dear friend

At Bearer, we love Ruby because of Rails and also because of its scripting capabilities. We used it to improve our detection quality over our low-tech grep approach.

We explicitly excluded files that were not part of the runtime logic of the application, like Gemfile, READMEs, or the spec folder. We also removed comments from results, considering every line that starts with // or # to be a comment.

Language detection

This works, but it’s kind of a one-size-fits-all solution that can become bloated and fragile as we support more languages. That’s why we made it more language-specific.

This meant we could further refine our parsing based on the language we detected. Instead of catch-all file and path names, we were able to build lists that better match each language’s implementation details. This keeps the rules more modular, and allows us to expand to new language parsing more easily. We use the excellent Linguist gem to return the primary language of a repository. That allows us to apply a pattern globally and not only at the file level.

False negatives

What about false negatives? We started to label new unknown projects to assess our precision and our recall. Both ended up high, which meant that our detection was of good quality, but we were missing out on a lot of records.

Searching for the http:// pattern wasn’t working well, as you can imagine if you’ve ever dealt with APIs across different languages. For instance, we would miss the StackExchange API here:

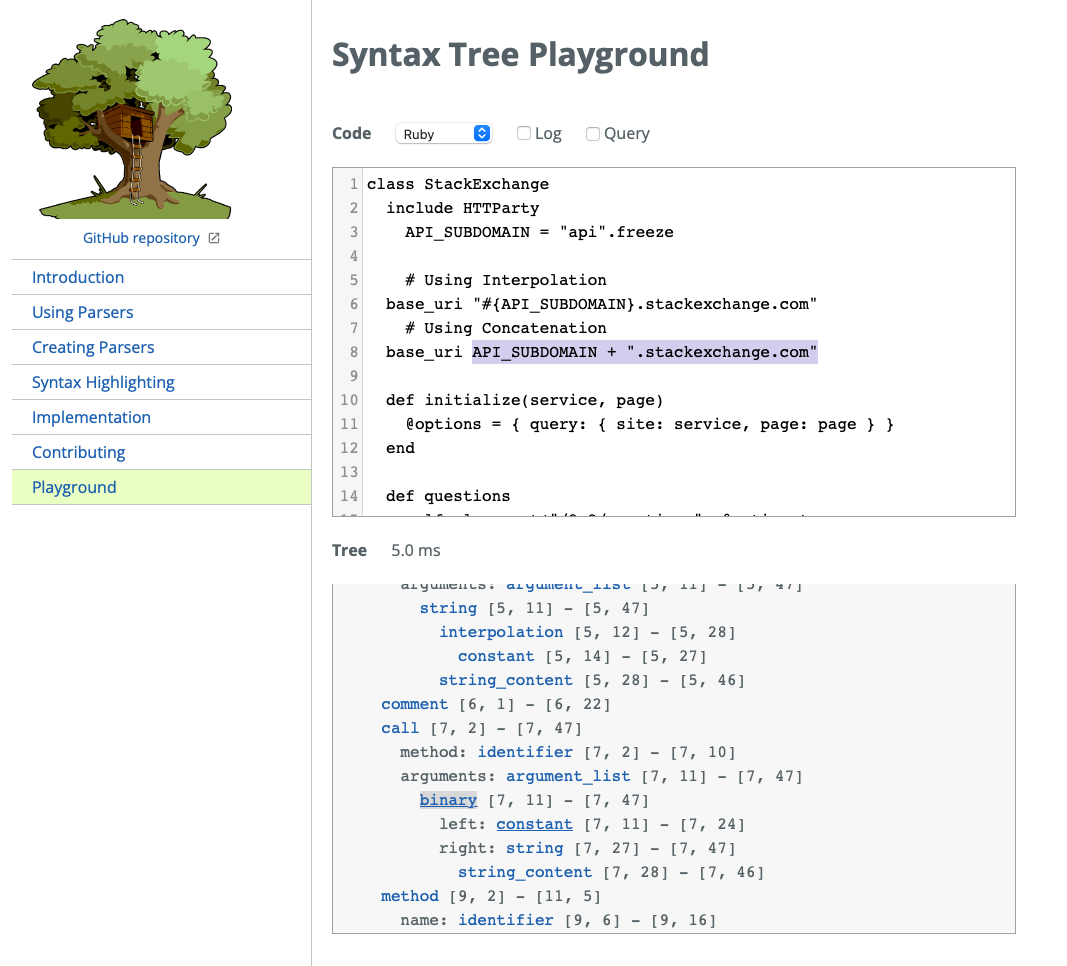

Domains could be even more obfuscated using interpolation or concatenation. Again, we would not detect it in the following example:

Clearly our implementation wasn’t working, so we needed a solution that would help us catch all the odd ways that our user’s code might be interacting with external URLs.

Walking the tree

That’s when we decided to use an Abstract Syntax Tree. An AST is a tree representation of the source code that conveys its structure. Each node in the syntax tree represents a construct from the source code.

We started playing with Tree-sitter, a tree generator an incremental parsing library. It can build a concrete syntax tree from a source file and efficiently update the syntax tree as the source file is edited. Tree-sitter aims to be general-purpose, fast, robust enough to give us the details we need, and dependency-free.

If we provide Tree-sitter with our StackExchange example from earlier, we can better understand how it turns code into a tree.

In the case of the concatenation, we see a string_content node which is a child of binary Node. The idea is to join the 2 nodes so that we see it in a format like this:

To achieve this, we annotate the tree—to add information that is important to us about how the nodes work together. Here is an example in Go:

That allows us to get the value of each node without losing the contextual information. We can process the parts to build *.stackexchange.com. With that working, we then understood the context of where a string has been used. We could then look at interpolation, concatenation, better comment support, and hash keys. Now we were capturing all the possible instances of what may or may not be a URL. No more false negatives! Better to know something is incorrect than never know it existed, right?

Classification, the secret sauce

Now we have the opposite problem: low recall, but low precision too. In a nutshell, we collect too many strings. Even if we apply heuristics to limit the number of records we receive, some go through.

For example, are we matching <something>.<something> or https://<something>.<something>, or something else entirely?

This is where we introduced a classification step. We won’t dive too deep into this process in this post, but it started the hard way. We processed thousands of repositories, public and private, and we analyzed all the strings we detected—with a spreadsheet.

We started with a subset of all the records we had and put together a list of heuristics to help us and make it as automatic as possible. What started as a low certainty score slowly rose to the point where we can have pretty high confidence on any of the thousands of records stored.

The set of heuristics led to around 20 steps to get results we are happy with. We also use these results to improve our database of known third-party APIs to help us get even better results and provide additional contexts for all future scans.

But that’s not all

This is only one part of what we do. The source code is full of information. For instance, many consumers of APIs rely on SDKs provided by the service rather than interact with the API directly. In some other cases, you might store URLs in environment variables.

Another interesting challenge involves microservices. Sometimes the appealing parts are not really the domains, but the paths. Let’s say we detect gateway.internal-domain.com. That’s good, but is that helpful? Not really. We want to know what’s behind it. For example, take these two instances:

- gateway.internal-domain.com/checkout

- gateway.internal-domain.com/products

Here, /checkout goes to one service and /products goes to another one. These are the parts that we care about when assessing the context of a URL. How do we solve this problem? We parse more, especially things like OpenAPI definitions, and continue the work on improving path detections and how they relate to one another.

Where we go from here

The original question was “How are you able to detect data flows from our source code?” and as you might have noticed, we haven’t talked about data at all.

That’s because the first step to mapping the data flow is to understand both ends. In other words, the engineering components that can process the data. We start by detecting the components, then focus on how they connect. We’ve talked almost exclusively about APIs, but we also work to detect additional resources like data stores, repositories, micro-services, and more. If data is processed or stored, we aim to detect it and to do that we need to detect each component that uses the data.

This detection and classification is only part of the story. We will talk about data type detection in future articles, so stay tuned!